Why Is AI Explainability Key In HR Decisions?

Updated : 4 months ago

So, we are finally here. Artificial Intelligence (AI) is now part of our daily lives. It’s being used in more than half of organizations worldwide. In HR, People teams are turning to AI for tasks like:

- Hiring

- Engaging employees, and

- Creating fairer workplaces

For example, AI can help match the right person to the right job and suggest career paths to employees. It can also help reduce bias in hiring and highlight strong candidates who might otherwise be missed.

AI is useful because it speeds up many HR processes and often makes them more effective. However, before using it, there are some important things to think about:

- Bias in data: AI learns from past data. If that data contains bias, the system may repeat the same mistakes.

  For instance, the resumes, hiring decisions, or chatbot conversations used to train the AI may reflect human biases.

  That’s why it’s important to check what data is being used, and whether the AI’s outcomes are fair and auditable.

- Transparency: Employment laws protect people from discrimination. If a candidate or employee challenges a decision:

  Can you explain how your AI tool reached its conclusion?

  If certain groups are being excluded, do you really know why?

- Ethics: Machines don’t have a sense of ethics.

  If HR’s goal is fair recruitment, can AI truly judge beyond qualifications and experience?

  Does it really make sure bias is minimized?

Today, more companies are adopting AI, but the understanding of how to use it responsibly is still catching up. Regulations are also evolving, and there’s increasing focus on how AI is used in hiring, promotions, and other HR decisions.

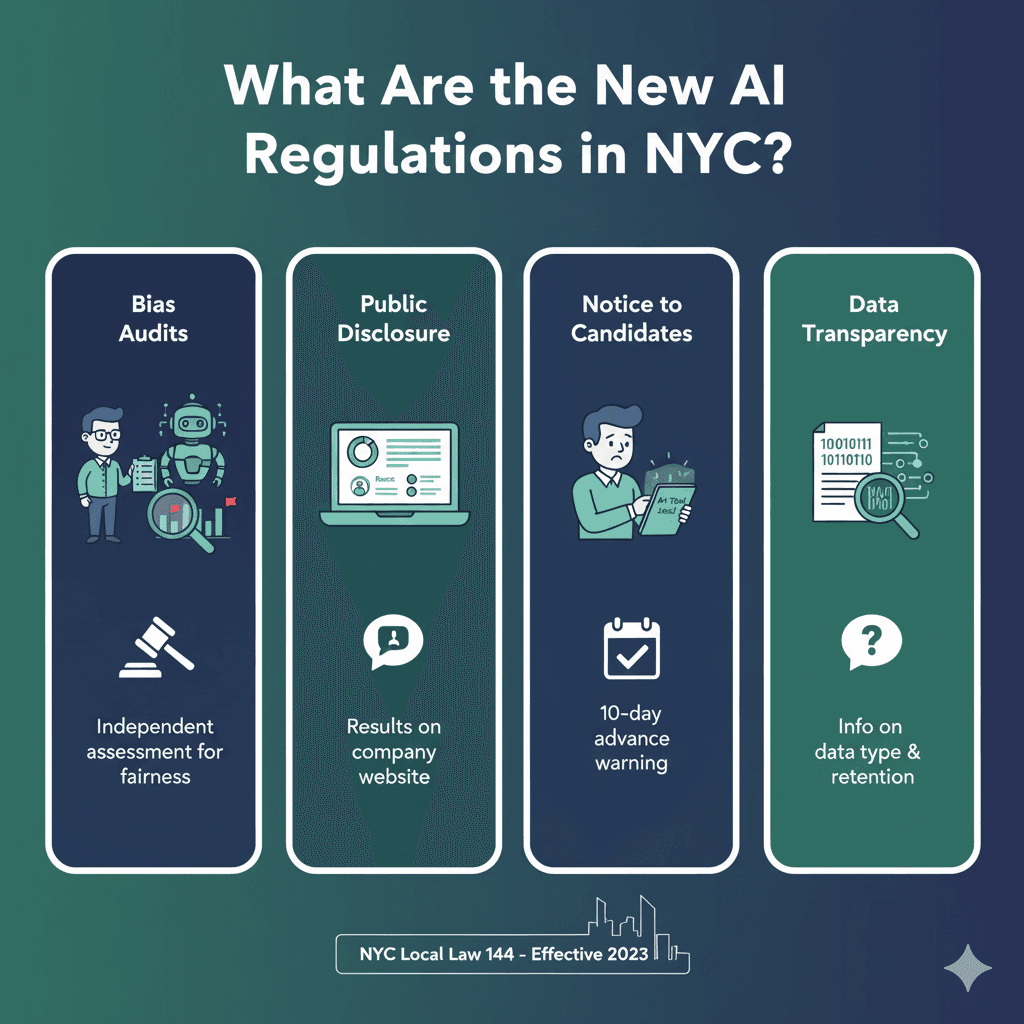

What Are The New AI Regulations in NYC?

In November 2021, the New York City Council passed a new law. It regulates how employers and employment agencies can use “automated employment decision tools” when making hiring decisions. The law came into effect on January 1, 2023.

Under the law, employers must:

- Conduct a yearly bias audit of their AI hiring tools and make a summary of the results public.

- Notify candidates if AI will be used to assess them.

- Offer alternatives, so candidates can request another method of assessment if they prefer.
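To make the idea of a bias audit more concrete, here is a minimal sketch of one calculation such audits commonly rely on: the selection rate of each group, and each group’s rate divided by the highest rate (often called an impact ratio). The group labels, the counts, and the function name `impact_ratios` are all made up for illustration; a real audit follows the regulator’s own definitions and is carried out by an independent auditor.

```python
from collections import Counter


def impact_ratios(records):
    """Return {group: (selection_rate, impact_ratio)}.

    records is an iterable of (group, selected) pairs, where selected
    is True when the tool advanced the candidate.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    rates = {group: selected[group] / totals[group] for group in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    # Each group's rate is compared with the most-selected group's rate.
    return {group: (rate, rate / best) for group, rate in rates.items()}


# Hypothetical screening outcomes: (group label, advanced to interview?)
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 24 + [("group_b", False)] * 76
)

result = impact_ratios(records)
for group, (rate, ratio) in sorted(result.items()):
    print(f"{group}: selection rate {rate:.2f}, impact ratio {ratio:.2f}")
```

A ratio well below 1 for a group is a signal to investigate, not a verdict on its own.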

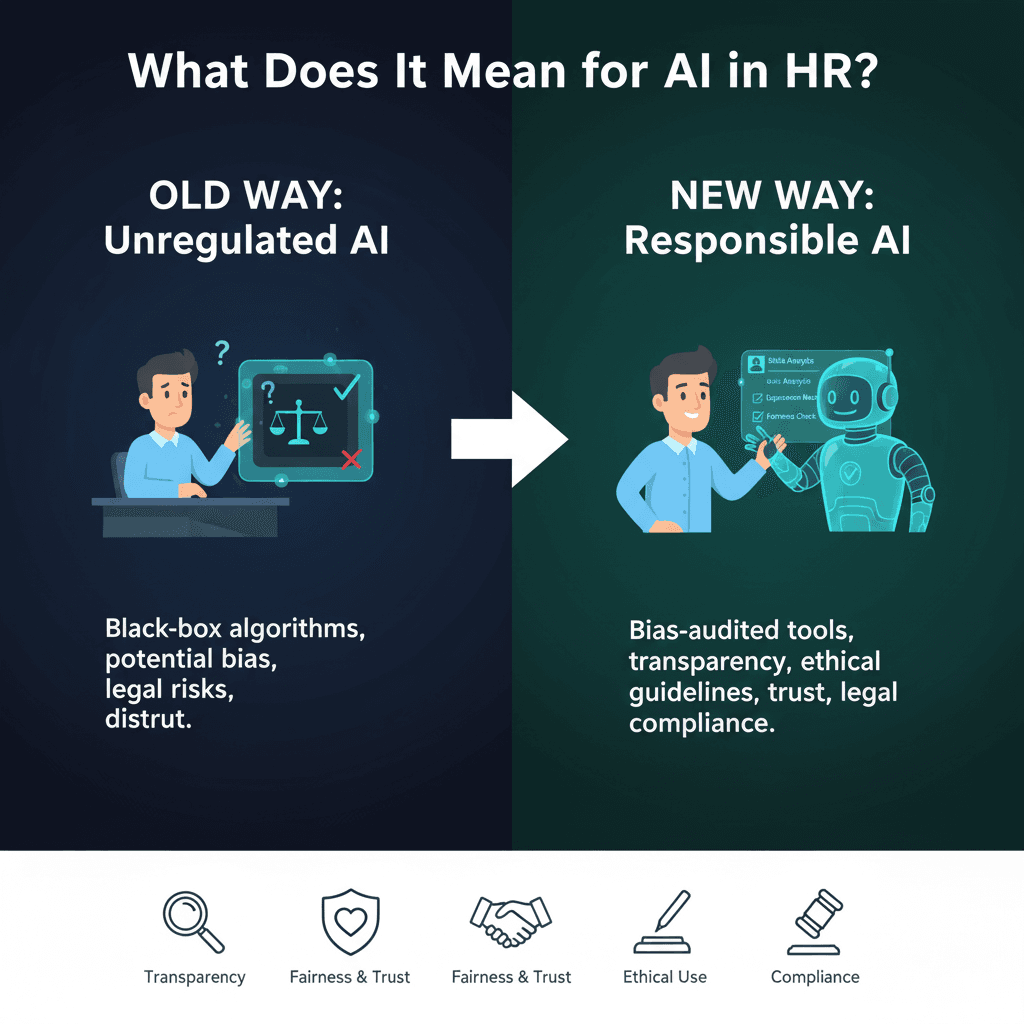

What Does It Mean for AI in HR?

AI isn’t being banned; it’s being regulated. The goal is to make hiring practices fairer, more transparent, and more accountable.

For example, in New York:

- Any AI hiring tool must be audited for bias before it’s put to use

- Candidates must be told that an automated system is part of the process

- Employers must also share what job qualifications or traits an AI will use in its evaluation

New York is leading the way, but other states and countries are expected to follow with similar regulations soon.

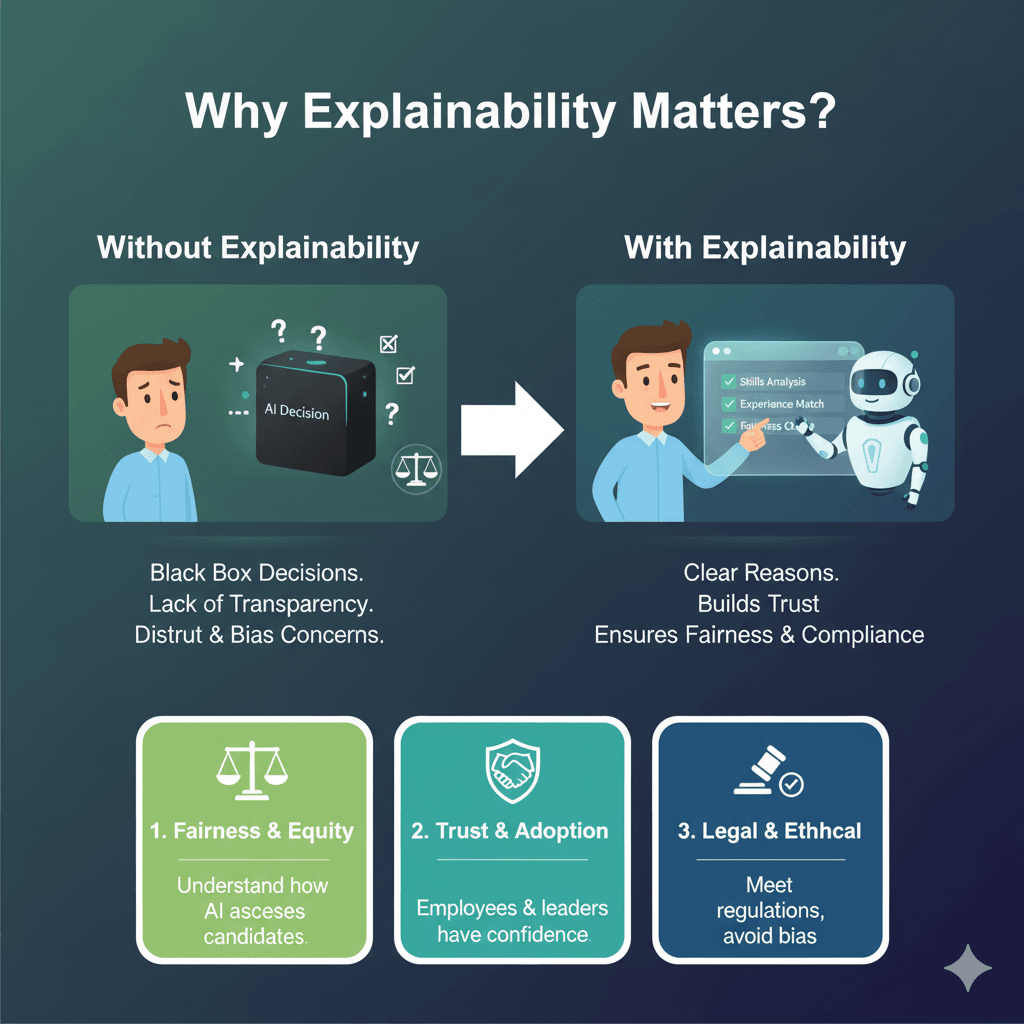

Why Does Explainability Matter?

These rules highlight a bigger challenge for HR teams. They must be able to prove that their AI systems are:

- fair

- transparent, and

- ethical

Candidates, employees, and regulators all want reassurance that AI tools aren’t making biased or hidden decisions.

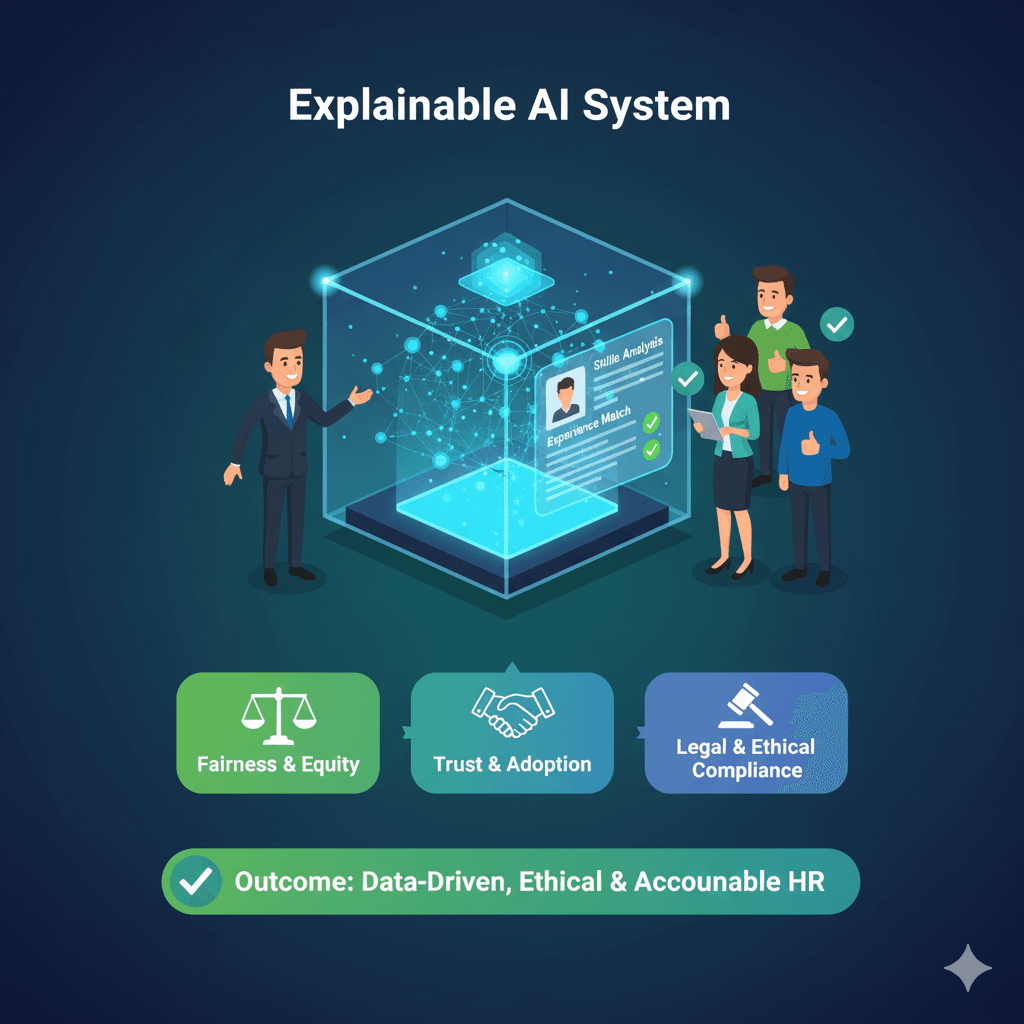

Explainable AI plays a big role here. It lets HR teams see:

- why a recommendation was made

- what factors were considered, and

- how much weight each factor carried.

It ensures AI is used to speed up and improve the hiring process, while humans still make the final decisions.

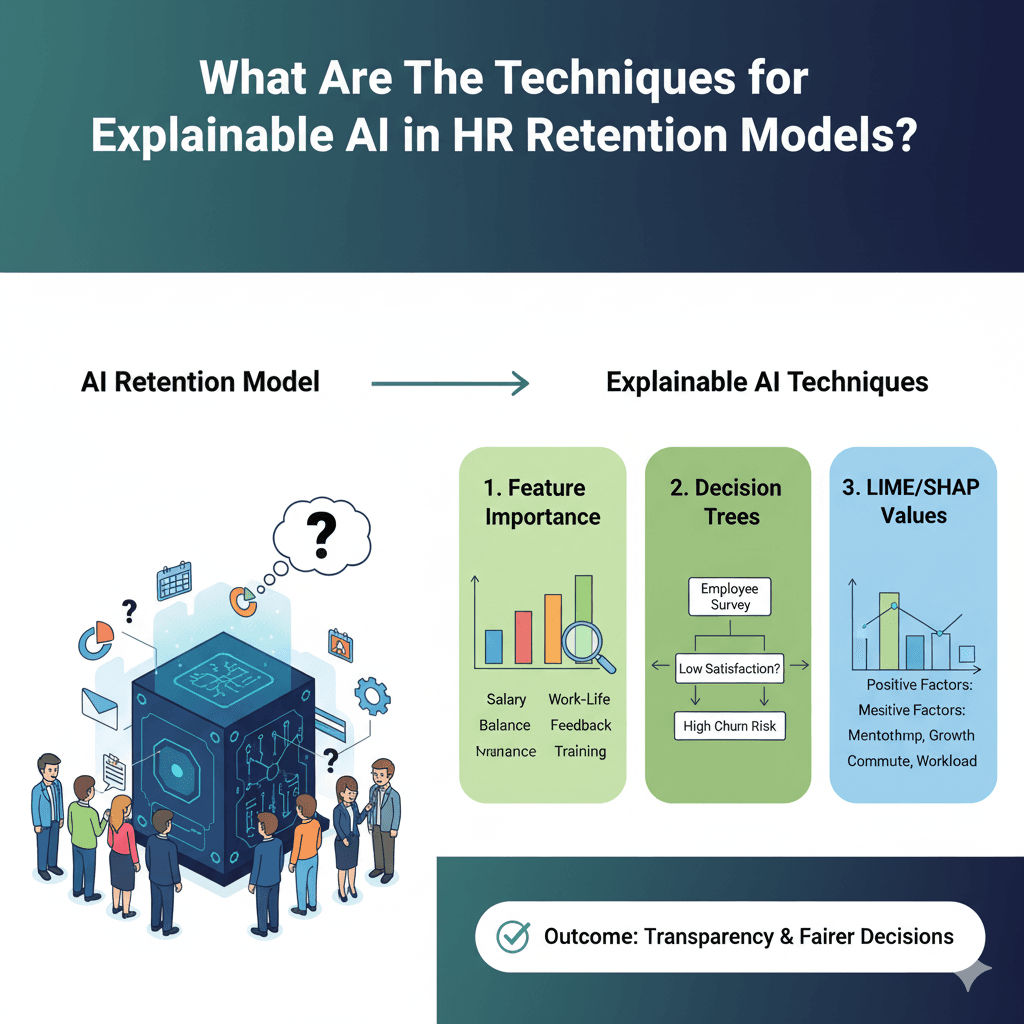

What Are The Techniques for Explainable AI in HR Retention Models?

When companies use AI to predict which employees might leave, the models can feel like a “black box.”

To build trust, HR teams need ways to understand how these predictions are made. Several methods now make AI more explainable, which helps organizations act with confidence.

SHAP (SHapley Additive exPlanations)

SHAP is a method from game theory. It shows how much each factor contributes to a prediction. In HR, SHAP can highlight why an employee might be at risk of leaving.

For example, it may reveal that a worker’s high risk comes from a lack of career growth and years without a promotion.

SHAP is powerful because it works on two levels:

- it can show overall workforce trends (the global view), and

- it can explain why a single employee is flagged (the local view)

This makes it well suited to HR dashboards.
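To show what “how much each factor contributes” means in practice, here is a small sketch that computes exact Shapley values by brute force for one employee. It does not use the `shap` library. The toy scoring function `attrition_risk`, the feature names, and the “typical employee” baseline are assumptions made up for this example, and trying every feature ordering is only practical for a handful of features.

```python
from itertools import permutations

FEATURES = ["years_since_promotion", "training_hours", "engagement_score"]


def attrition_risk(employee):
    """Hypothetical toy risk score standing in for a trained model."""
    risk = 0.50
    risk += 0.08 * employee["years_since_promotion"]
    risk -= 0.005 * employee["training_hours"]
    risk -= 0.04 * employee["engagement_score"]
    # Interaction: a long wait for promotion combined with little training.
    if employee["years_since_promotion"] >= 3 and employee["training_hours"] < 10:
        risk += 0.10
    return risk


def shapley_values(model, instance, baseline):
    """Average each feature's marginal effect over every feature ordering."""
    totals = {name: 0.0 for name in FEATURES}
    orderings = list(permutations(FEATURES))
    for order in orderings:
        current = dict(baseline)  # start from the baseline employee
        previous = model(current)
        for name in order:
            current[name] = instance[name]  # switch one feature to the real value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {name: total / len(orderings) for name, total in totals.items()}


# Assumed "typical" employee used as the reference point.
baseline = {"years_since_promotion": 1, "training_hours": 20, "engagement_score": 7}
# Hypothetical employee flagged by the model.
employee = {"years_since_promotion": 4, "training_hours": 5, "engagement_score": 5}

values = shapley_values(attrition_risk, employee, baseline)
for name, value in sorted(values.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.3f}")
```

The contributions add up to the gap between this employee’s score and the baseline score, which is the local view. Averaging the absolute contributions across many employees is one common way to get the global view.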

LIME (Local Interpretable Model-Agnostic Explanations)

LIME helps make complex models understandable. It does this by fitting a simple, easy-to-read approximation of the model around an individual prediction.

You can think of it as zooming in on one case to see what’s driving the result.

For instance, if an employee is marked as high risk due to lower engagement scores, LIME might reveal that even a small change in that score could change the prediction.

It helps HR validate whether the data is reliable before taking action.
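The “zooming in” idea can be sketched in a few lines. The example below is not the `lime` package; it is a hand-rolled approximation of the same recipe: perturb one employee’s data, weight the perturbed samples by how close they stay to the original, and fit a weighted linear model to the black box’s answers. The model `black_box_risk`, its coefficients, and the employee’s values are all hypothetical.

```python
import math
import random


def black_box_risk(engagement, years_since_promotion):
    """Hypothetical stand-in for an opaque attrition model."""
    z = 2.0 - 0.9 * engagement + 0.3 * years_since_promotion
    return 1.0 / (1.0 + math.exp(-z))


def solve_linear_system(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    x = [0.0] * n
    for row in reversed(range(n)):
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def local_surrogate(model, instance, n_samples=500, scale=0.5, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = random.Random(seed)
    dim = len(instance) + 1  # intercept plus one slope per feature
    xtwx = [[0.0] * dim for _ in range(dim)]
    xtwy = [0.0] * dim
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in instance]
        point = [value + delta for value, delta in zip(instance, offsets)]
        # Samples closer to the original instance get more weight.
        weight = math.exp(-sum(d * d for d in offsets) / (2 * scale ** 2))
        row = [1.0] + offsets
        y = model(*point)
        for i in range(dim):
            xtwy[i] += weight * row[i] * y
            for j in range(dim):
                xtwx[i][j] += weight * row[i] * row[j]
    intercept, *slopes = solve_linear_system(xtwx, xtwy)
    return intercept, slopes


# Hypothetical employee: engagement score 3.0, 2 years since promotion.
intercept, slopes = local_surrogate(black_box_risk, [3.0, 2.0])
print(f"local risk estimate: {intercept:.2f}")
print(f"effect of +1 engagement point: {slopes[0]:+.2f}")
print(f"effect of +1 year without promotion: {slopes[1]:+.2f}")
```

The slopes only describe the model’s behavior near this one employee. A large slope on engagement is the kind of result that tells HR a small change in that score could move the prediction.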

Counterfactual Explanations

Counterfactuals answer the “what if” question: what small change could flip the prediction?

In HR, it could mean showing that if an employee had been given training last quarter, their risk of leaving would be much lower.

The approach is especially useful for designing interventions and policies because it points directly to actions that could make a difference.
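Here is a minimal sketch of a counterfactual search, assuming a points-based classifier and a short list of changes HR could realistically make. The scoring rule in `predicts_leaving`, the feature names, and the limits are hypothetical. The search changes one feature at a time in small steps and reports the first value that flips the prediction.

```python
def predicts_leaving(employee):
    """Hypothetical points-based stand-in for a trained attrition classifier."""
    points = (
        40
        + 8 * employee["years_since_promotion"]
        - 1 * employee["training_hours"]
        - 4 * employee["engagement_score"]
    )
    return points >= 30


def single_feature_counterfactuals(model, employee, actions):
    """Find the smallest single-feature change that flips the prediction.

    actions maps a feature name to (step, upper_limit); only features that
    HR can actually influence should be listed.
    """
    original = model(employee)
    found = {}
    for feature, (step, limit) in actions.items():
        candidate = dict(employee)
        while candidate[feature] + step <= limit:
            candidate[feature] += step
            if model(candidate) != original:
                found[feature] = candidate[feature]
                break
    return found


# Hypothetical employee currently predicted to leave.
employee = {"years_since_promotion": 4, "training_hours": 5, "engagement_score": 5}
# Assumed actionable changes: more training hours, higher engagement (max 10).
actions = {"training_hours": (1, 40), "engagement_score": (1, 10)}

found = single_feature_counterfactuals(predicts_leaving, employee, actions)
for feature, new_value in found.items():
    print(f"Raising {feature} from {employee[feature]} to {new_value} flips the prediction")
```

Restricting the search to actionable features is a deliberate choice: a counterfactual that asks for something HR cannot change is not a useful recommendation.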

What Does the Research Tell Us?

Recent academic work on explainable AI in HR, including a 2024 study published in Decision Support Systems, provides some powerful insights.

The research shows that explainability is not just a “nice-to-have.” It’s a practical necessity for HR leaders.

Here are four key findings that HR teams can relate to:

Trust and Adoption Go Hand in Hand

When HR managers were given interpretable explanations, they were 30–40% more likely to trust and act on AI predictions than when the same predictions were presented without explanations.

Those explanations can include SHAP values or counterfactual examples. In other words, the “black-box” effect makes HR teams hesitant, while transparency encourages adoption.

Employee Acceptance Improves with Transparency

The study also found that employees were more open to AI-driven evaluations when they could see why a decision was made.

For example, an employee flagged as a retention risk was less likely to push back when the AI clearly showed that low training participation and stagnant career growth were the drivers.

As the authors note, “transparency bridges the gap between technical accuracy and human trust.”

Better Decision-Making, Not Just Compliance

Regulations already demand explainability, but the research suggests that explainability actually improves HR outcomes. With interpretable insights, managers could design more targeted interventions. Instead of generic “one-size-fits-all” policies, they could offer:

- training or

- mentorship

The proactive approach reduced the predicted attrition rate in simulations run by the researchers.

Explainability Supports Ethical HR Practices

Beyond numbers, the study highlights that explainable AI helps companies align with their values. It allows HR teams to check for fairness, validate whether certain groups are disproportionately flagged, and adjust policies before problems escalate.

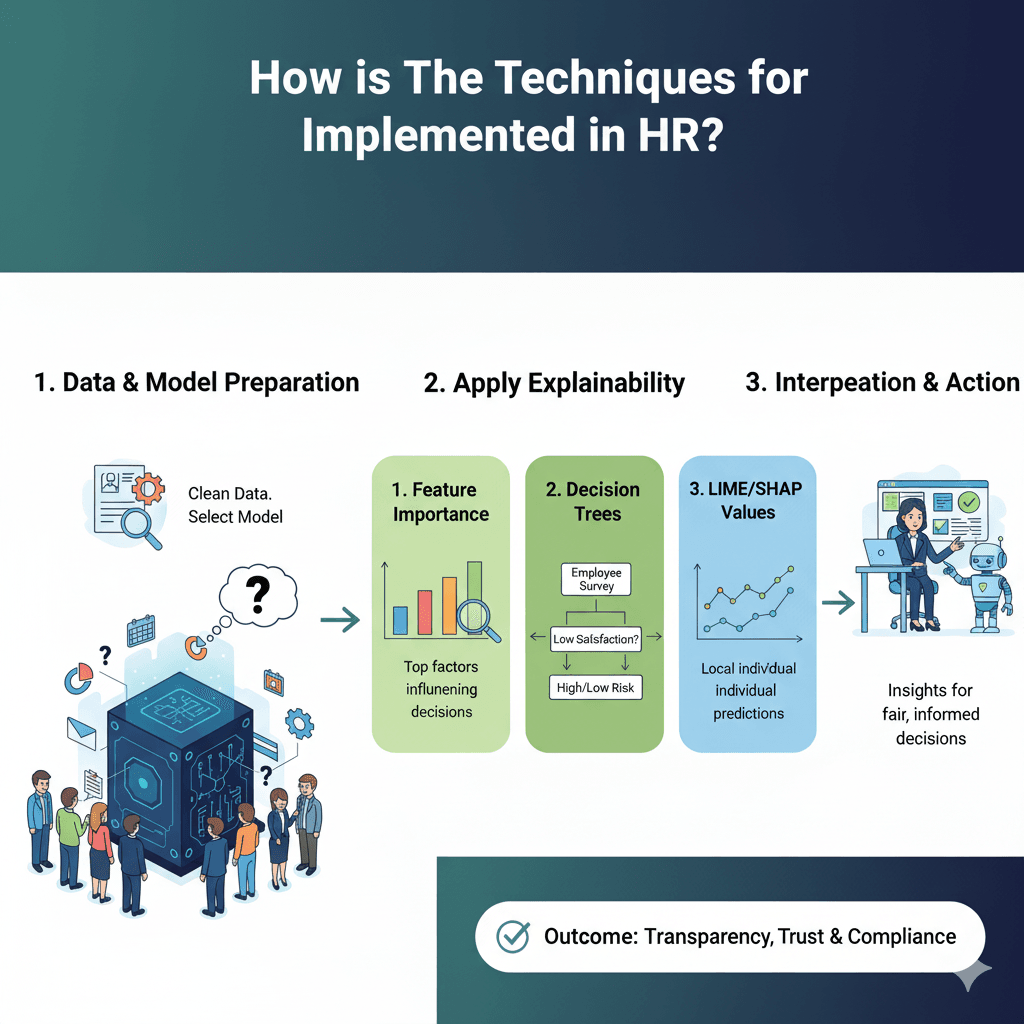

How is Explainable AI Implemented in HR?

Explainability should be built into every step of the process. It ensures that AI supports better decisions, protects employees, and follows the organizational goals.

1. Use Explainability From the Beginning

Explainability shouldn’t be an afterthought. When designing AI models for hiring, retention, or promotions, HR and data teams should choose algorithms and tools that can be interpreted.

Methods like SHAP, LIME, and counterfactual explanations should be part of the model design, so that insights are available as soon as predictions are made.

2. Tailor Insights to the Audience

Not all stakeholders interpret data the same way.

HR managers benefit from simple narratives or visual dashboards that show the key factors behind a prediction.

Data scientists need more technical output, such as feature importance plots, to validate model behavior.

Customizing explanations for each audience ensures that decisions are actionable and understandable across the organization.
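As a rough illustration of tailoring output for HR managers, the sketch below turns a set of per-factor contributions (for example, from a SHAP-style analysis) into a one-line narrative that keeps only the top drivers. The function name `explain_for_manager`, the employee label, and the contribution values are hypothetical.

```python
def explain_for_manager(name, contributions, top_n=2):
    """Summarize the largest drivers of a prediction in plain language.

    contributions maps a factor label to its signed contribution to the
    predicted risk (positive values raise the risk).
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = []
    for label, value in ranked[:top_n]:
        direction = "raises" if value > 0 else "lowers"
        points = abs(value) * 100
        parts.append(f"{label} {direction} the predicted risk by {points:.0f} percentage points")
    return f"{name}: " + "; ".join(parts) + "."


# Hypothetical contributions for one employee.
contributions = {
    "years without promotion": 0.29,
    "low training hours": 0.13,
    "engagement score": -0.08,
}

summary = explain_for_manager("Employee 1042", contributions)
print(summary)
```

A data scientist would still want the full table of values; the narrative is just a different view of the same numbers.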

3. Decision Making

By combining model predictions with explainable insights, HR professionals can make informed interventions.

Those include:

- targeted training,

- mentorship programs, or

- adjustments to workloads

The approach balances efficiency with ethical oversight and ensures accountability.

4. Audits and Fairness Checks

Even the best models can drift over time or inadvertently introduce bias.

Regularly reviewing predictions for fairness and validating explanations helps HR teams catch unintended disparities and adjust policies proactively.

5. Know Impact and Adjust

Make sure to implement metrics that check both accuracy and explainability:

- Are at-risk employees being identified correctly?

- Are HR interventions based on the insights actually reducing bias?

You can use the findings to refine the model and HR strategies. This creates a feedback loop that improves both AI performance and workforce outcomes over time.
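For the first question, two standard measures are precision (of the employees the model flagged, how many actually left) and recall (of the employees who left, how many the model had flagged). The sketch below computes both from two sets of employee IDs; the IDs and the function name are made up for illustration.

```python
def precision_recall(flagged, left):
    """Compare flagged employee IDs with the IDs of employees who left."""
    true_positives = len(flagged & left)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(left) if left else 0.0
    return precision, recall


# Hypothetical review period: who the model flagged and who actually left.
flagged = {"e01", "e02", "e03", "e04", "e05"}
left = {"e02", "e04", "e05", "e09"}

precision, recall = precision_recall(flagged, left)
print(f"precision: {precision:.2f}, recall: {recall:.2f}")
```

Tracking both numbers over time, ideally per employee group as well as overall, gives the feedback loop something concrete to measure.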

6. Build a Culture of Transparency

Employees are more likely to accept AI-assisted decisions when they understand the reason behind them.

Transparent communication also reassures regulators and aligns with emerging laws, like New York’s AI hiring regulations.

To Sum Up

AI in HR should support decisions that people can trust. Explainability bridges the gap between algorithms and accountability.

It turns complex predictions into clear, fair, and auditable insights. For HR leaders, it means being able to show not just what a system decided, but also why.

Now that laws, employees, and organizations all demand fairness, explainable AI is more than just a nice-to-have. It’s the foundation of responsible HR decision-making.

Frequently Asked Questions

What is explainable AI in HR?

Explainable AI (XAI) helps HR teams understand how AI makes its decisions. Instead of working like a “black box,” it shows why a candidate was selected or rejected. It also shows what factors influenced that decision.

Is AI good or bad for HR?

AI can be very useful in HR. It can analyze data to measure employee performance, highlight where employees might need support, and make performance reviews more accurate. Overall, it helps HR save time and make smarter decisions.

How can HR professionals learn AI?

HR professionals can start by joining AI-focused training programs. These programs teach the basics of data, how to use AI responsibly, and how to reduce bias in hiring. They also help HR leaders build confidence with new tools and become experts in using AI for people management.