Legal, Ethical, and Compliance Aspects of AI in HR


Artificial Intelligence (AI) is reshaping HR at lightning speed, transforming areas such as:

- candidate screening

- payroll management

- training

- employee engagement

However, power comes with responsibility.

| Every algorithm that makes a hiring decision, evaluates performance, or handles employee data must comply with strict legal, ethical, and privacy standards. |

Let’s check out some key aspects of AI compliance in HR to help HR leaders adopt AI responsibly in 2025.

Why Does AI Compliance Matter in HR?

AI in HR goes beyond speeding up tasks. It influences:

- who gets hired

- how employees are analyzed

- how careers progress

It means every decision an AI system makes can change people’s lives in very real ways.

Because of such an impact, regulators are also stepping in.

The EU AI Act classifies many HR-related AI tools as high risk, and New York City’s Local Law 144 specifically regulates automated hiring tools. These laws demand oversight, audits, and transparency.

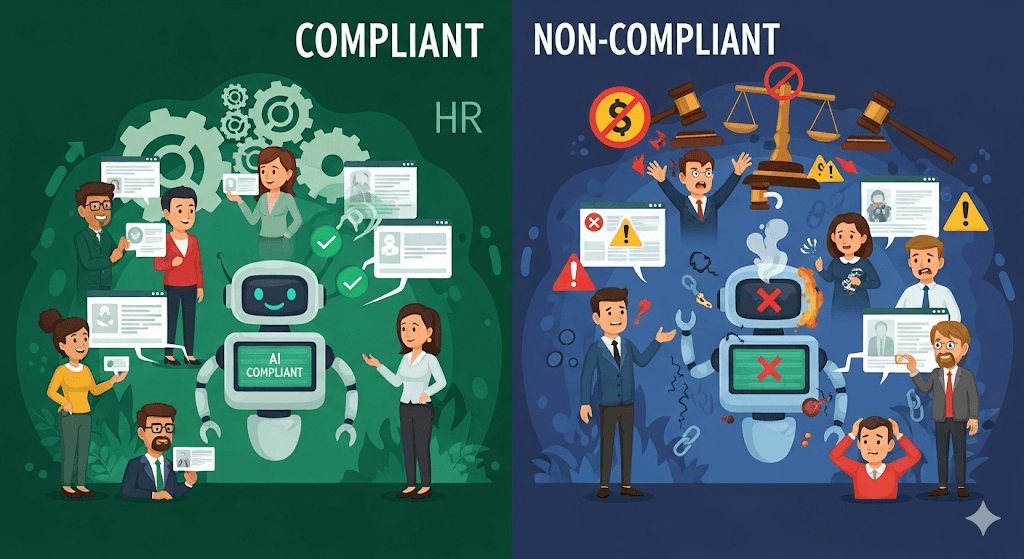

Failing to comply isn’t just about fines. It can:

- Lead to biased or unfair hiring decisions

- Damage employee trust in HR processes

- Cause serious reputational harm to the organization

AI compliance in HR is not optional. It’s the foundation for fairness, trust, and legal safety.

HR Data Privacy and AI

AI runs on data. However, in HR, that data is highly sensitive. It includes personal details about employees and candidates.

If mishandled, these can create serious risks. That’s why privacy regulations like GDPR place strict obligations on companies.

To stay compliant, organizations should:

- Protect data with encryption and anonymization

- Run DPIAs (Data Protection Impact Assessments) before using AI in recruitment or performance management

- Get clear consent for automated decision-making

- Provide a human review process so individuals can challenge AI-driven outcomes

| Whenever AI touches HR data, treat GDPR as your blueprint: make transparency, consent, and human oversight standard practice. |
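As a minimal sketch of the anonymization step above, the Python below strips direct identifiers from a candidate record and replaces them with a keyed hash (HMAC-SHA256) before the record reaches an AI tool. The field names and the key are hypothetical. Because whoever holds the key can re-link tokens to people by re-hashing known values, GDPR treats this as pseudonymization rather than full anonymization, so the output is still personal data.

```python
import hashlib
import hmac

# Hypothetical key for illustration only. In practice, load it from a secrets
# manager and store it separately from the HR dataset.
SECRET_KEY = b"example-key-do-not-use-in-production"

# Fields treated as direct identifiers in this sketch (an assumption).
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize_id(value: str, key: bytes = SECRET_KEY) -> str:
    """Map an identifier to a stable token with HMAC-SHA256.

    The same value and key always give the same token, so records can still
    be linked, but the token does not reveal the value to anyone without the key.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, key: bytes = SECRET_KEY) -> dict:
    """Drop direct identifiers and add a linkable token derived from the email."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["candidate_token"] = pseudonymize_id(record["email"], key)
    return cleaned


candidate = {
    "name": "Jane Doe",
    "email": "jane.doe@example.com",
    "phone": "555-0100",
    "years_experience": 6,
    "skills": ["python", "sql"],
}
safe = pseudonymize_record(candidate)
print(safe)
```

The AI tool only ever sees `safe`; the key, and therefore the ability to re-identify, stays with the HR team.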

Ethical AI in Recruitment

Recruitment is one of the most promising, and most sensitive, applications of AI.

Tools such as the following can dramatically speed up hiring by reducing manual effort and improving the candidate experience:

- automated CV screeners

- chatbots

- video interview analyzers

However, if not carefully managed, they also carry significant ethical risks.

The biggest concern is bias. If an AI system is trained on historical hiring data that reflects past discrimination, it may learn to repeat that pattern and unintentionally exclude qualified candidates based on:

- gender

- ethnicity

- age

- other protected characteristics

This doesn’t just create fairness issues; it can also expose organizations to legal challenges and damage their employer brand.

To keep AI recruiting ethical, HR teams should:

- Audit algorithms regularly to detect and eliminate bias in outcomes.

- Use diverse and representative datasets, particularly when training AI tools.

- Keep humans in the loop for key hiring decisions rather than relying solely on automation.

- Be transparent with candidates by disclosing when and how AI is used in the process.

Some governments are already moving to regulate this space.

| For example, New York City’s Local Law 144 requires employers to have an independent bias audit of an automated hiring tool conducted no more than one year before using it. Regulations like this are likely to spread to other jurisdictions, so companies should build fairness and transparency into their AI practices now. |
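To make the bias-audit idea concrete: audits under Local Law 144 report, for each demographic category, a selection rate and an impact ratio (the category’s selection rate divided by the rate of the most-selected category). The sketch below computes both from made-up, illustrative counts. The 0.8 cutoff is the EEOC’s long-standing “four-fifths” rule of thumb for adverse impact, used here as an assumption; Local Law 144 itself requires publishing the ratios rather than meeting a fixed threshold.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    assessed: int  # candidates in this category scored by the tool
    selected: int  # candidates in this category the tool advanced


def impact_ratios(outcomes: dict[str, GroupOutcome]) -> dict[str, float]:
    """Each category's selection rate divided by the highest selection rate."""
    rates = {
        group: o.selected / o.assessed
        for group, o in outcomes.items()
        if o.assessed > 0  # skip empty categories instead of dividing by zero
    }
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any category")
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Categories whose impact ratio falls below the four-fifths rule of thumb."""
    return sorted(group for group, r in ratios.items() if r < threshold)


# Illustrative numbers only, not real audit data.
outcomes = {
    "men": GroupOutcome(assessed=200, selected=60),    # 30% selected
    "women": GroupOutcome(assessed=180, selected=36),  # 20% selected
}
ratios = impact_ratios(outcomes)
print(ratios)                       # men: 1.0, women: about 0.67
print(flag_adverse_impact(ratios))  # ['women']
```

A flagged category is a signal to investigate the tool and its training data, not an automatic legal conclusion.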

AI and Employment Law Compliance

AI in HR doesn’t operate in a legal vacuum. Beyond data protection and ethics, organizations must ensure their tools comply with employment laws, which are evolving rapidly worldwide. These laws aim to protect candidates and employees from unfair treatment when AI influences:

- hiring

- promotions

- workplace decisions

Some key examples include:

U.S. Federal Laws

- ADEA (Age Discrimination in Employment Act): Prohibits age discrimination against people aged 40 and over, which includes algorithms that indirectly screen out older candidates.

- FCRA (Fair Credit Reporting Act): Governs how background checks are conducted, even when they are automated by AI.

Illinois AI Video Interview Act

It requires employers to notify candidates, explain how the AI works and what it evaluates, and obtain their consent before using AI to evaluate video interviews. The goal is transparency and fairness.
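A hypothetical way to enforce these steps in an applicant-tracking workflow is to block AI analysis of a video interview until notice, explanation, and consent are all on record. The record fields and routing labels below are illustrative assumptions, not terms defined by the Act.

```python
from dataclasses import dataclass


@dataclass
class VideoInterviewConsent:
    candidate_id: str
    notified: bool = False   # told that AI may be used to analyze the interview
    explained: bool = False  # told how the AI works and what it evaluates
    consented: bool = False  # agreed to be evaluated by the AI


def missing_steps(record: VideoInterviewConsent) -> list[str]:
    """List the notice and consent steps that have not been completed."""
    steps = {
        "notice": record.notified,
        "explanation": record.explained,
        "consent": record.consented,
    }
    return [name for name, done in steps.items() if not done]


def route_interview(record: VideoInterviewConsent) -> str:
    """Allow AI analysis only when every step is complete."""
    if missing_steps(record):
        return "human_review_only"
    return "ai_analysis_allowed"


candidates = [
    VideoInterviewConsent("c-001", notified=True, explained=True, consented=True),
    VideoInterviewConsent("c-002", notified=True),
]
for c in candidates:
    print(c.candidate_id, route_interview(c), missing_steps(c))
```

Defaulting every field to `False` means a record that was never filled in is routed away from AI analysis rather than into it.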

New York City Local Law 144

It requires annual bias audits for automated hiring tools and requires employers to notify candidates about the AI’s role in the process.

Colorado (Effective 2026)

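It introduces one of the most forward-looking AI employment laws. It requires employers that use high-risk AI systems, including hiring tools, to take reasonable care to prevent algorithmic discrimination, assess the risks of those systems, and be transparent about their use.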

European Union AI Act

It classifies HR AI systems, such as recruitment and employee-monitoring tools, as “high risk,” which brings strict requirements for:

- audits

- documentation

- explainability

- human oversight

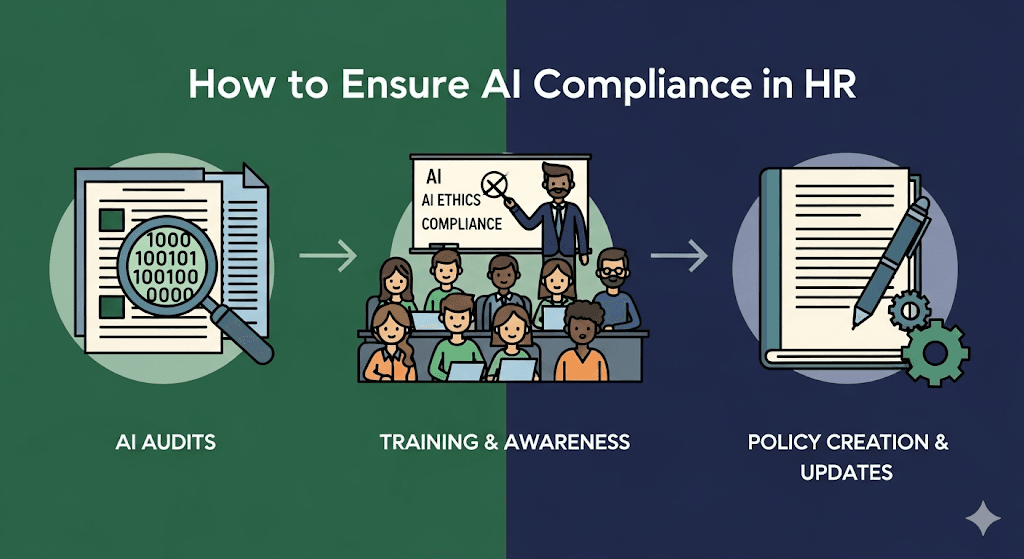

How to Ensure AI Compliance in HR?

Adopting AI in HR is much more than just buying the latest tools. Compliance means building:

- policies

- processes

- safeguards

These protect people, data, and decisions. Here’s a structured framework HR leaders can follow:

1. Policy & Oversight

AI needs clear rules. HR departments should:

- Define where and how AI will be used in HR processes, for example in recruitment, performance reviews, or payroll.

- Assign accountability to HR, legal, and compliance teams.

- Establish an AI ethics committee to review and monitor system use.

This ensures there’s always human oversight and accountability, not just automation.

2. Monitor Bias & Fairness

Algorithms should be regularly checked for fairness. Organizations should:

- Conduct bias audits on recruitment and promotion tools.

- Retrain AI models with diverse and representative datasets.

- Use fairness testing tools, such as uRecruits, to detect discrimination early.

3. Transparency & Communication

Trust in AI starts with openness. Companies should:

Disclose AI usage in:

- job postings

- candidate communications

- HR policies

Use explainable AI so HR professionals can justify outcomes to employees and regulators.
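One simple route to explainability is an interpretable model whose score can be broken down feature by feature. The sketch below assumes a linear scoring model with hand-set, hypothetical weights. Real tools are usually more complex and may need dedicated explanation methods, but the goal is the same: a reviewer can see which factors drove a candidate’s score.

```python
# Hypothetical, hand-set weights for illustration; not a real screening model.
WEIGHTS = {
    "years_experience": 0.5,
    "skills_matched": 1.2,
    "certifications": 0.8,
}


def score_with_explanation(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Score a candidate with a linear model and return each feature's contribution.

    In a linear model the score is exactly the sum of weight * value, so the
    contributions fully account for the score.
    """
    contributions = [
        (name, weight * features.get(name, 0.0)) for name, weight in WEIGHTS.items()
    ]
    # Largest effects first, so reviewers see what mattered most.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    total = sum(value for _, value in contributions)
    return total, contributions


score, reasons = score_with_explanation(
    {"years_experience": 4, "skills_matched": 3, "certifications": 1}
)
print(f"score = {score:.2f}")
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")
```

Here `skills_matched` is listed first because it contributes the most, which gives an HR professional a concrete answer to “why this score?”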

4. Data Protection

AI runs on sensitive HR data, so protecting it is non-negotiable:

- Encrypt and anonymize personal data where possible.

- Restrict access to sensitive information to authorized staff only.

- Document data flows and storage practices so you are ready for audits.

Strong privacy controls reduce the risk of fines and reputational damage.
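Access restriction and audit readiness can work together. The sketch below returns only the fields a role is allowed to see (denying everything to unknown roles) and records each access with a timestamp. The roles, field names, and in-memory log are hypothetical stand-ins for an organization’s own identity and logging systems.

```python
from datetime import datetime, timezone

# Hypothetical roles and the fields each may see (illustrative only).
FIELD_PERMISSIONS = {
    "recruiter": {"candidate_token", "skills", "years_experience"},
    "hr_manager": {"candidate_token", "skills", "years_experience", "salary_expectation"},
}

# In-memory access log for the sketch; a real system would use tamper-evident storage.
access_log: list[dict] = []


def read_record(record: dict, role: str, user: str) -> dict:
    """Return only the fields the role may see, and log the access."""
    allowed = FIELD_PERMISSIONS.get(role, set())  # unknown roles see nothing
    visible = {k: v for k, v in record.items() if k in allowed}
    access_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "granted": sorted(visible),
        "denied": sorted(k for k in record if k not in allowed),
    })
    return visible


record = {
    "candidate_token": "c-001",
    "skills": ["sql"],
    "years_experience": 3,
    "salary_expectation": 55000,
    "health_notes": "reasonable adjustment requested",
}
print(read_record(record, "recruiter", "alice"))
print(read_record(record, "contractor", "bob"))  # unknown role: nothing returned
```

Denying by default means a new role has to be granted access deliberately, and the log shows auditors who saw what and when.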

5. Training & Continuous Improvement

Compliance isn’t a one-time effort. It’s ongoing:

Train HR teams on:

- AI ethics

- legal requirements

- system limitations

Pilot new AI tools with small groups before rolling them out company-wide. Lastly, update policies continuously as laws and regulations evolve.

The Need for Responsible AI in HR

AI in HR is different from AI in other domains. Many technical fields operate on hard facts and clear data points, but HR deals with human lives, behaviors, and emotions that don’t fit neatly into algorithms. That’s why responsible AI adoption in HR isn’t optional; it’s essential.

If implemented carelessly, AI can:

- erode trust

- perpetuate bias

- damage workplace culture

Here are some key challenges organizations must address:

1. Explainability Gaps in HR Systems

AI-driven tools in recruitment, promotions, or performance reviews often function as “black boxes.”

When outcomes can’t be explained, employees and managers lose trust. Moreover, several laws require transparency in automated decisions, including:

- GDPR

- EU AI Act

HR leaders must ensure that AI systems not only deliver accurate results but also explain how those results are reached.

2. Bias and Inequality Risks

Poorly trained algorithms can embed discrimination into HR processes. A well-known example came to light in 2018, when Amazon’s AI hiring tool was found to downgrade resumes that contained the word “women’s,” effectively disadvantaging female applicants. Although it was designed to improve efficiency, the system reproduced historical biases, which shows why regular audits and diverse training data are important for fairness.

3. Data Privacy and Compliance Pressures

The rise of generative AI coincides with stricter privacy legislation.

In the U.S. alone, 13 states have passed comprehensive data protection laws in the past three years, while global frameworks like GDPR enforce equally tough standards. These laws don’t always target AI directly, but they require:

- Consent for data use

- Safeguards around sensitive HR information

- Clear processes for handling data rights requests

For HR, it means every AI initiative must be built with data protection by design to avoid legal and reputational fallout.

What Are The Legal Challenges?

Using AI in HR brings many legal responsibilities. Organizations must make sure their AI systems follow laws about data protection, discrimination, labor rights, and overall legal compliance. Here’s a breakdown:

1. Data Protection Laws (like GDPR)

Handling employee data comes with strict rules. For example, the EU GDPR requires companies to:

- Have a lawful basis, such as clear consent, before collecting personal data.

- Use data only for legitimate purposes.

- Keep data safe through encryption, anonymization, and secure storage.

Non-compliance can lead to hefty fines and legal trouble. Companies should also appoint Data Protection Officers (DPOs) to oversee compliance and run regular training so employees understand data privacy rights.

2. Anti-Discrimination Laws

AI in HR must not discriminate against anyone based on race, gender, age, or other protected characteristics.

Biased AI can lead to lawsuits and harm a company’s reputation. To prevent this, organizations should:

- Train AI on diverse and representative datasets.

- Conduct regular bias audits.

- Involve cross-functional teams in AI development and oversight.

- Allow employees to report perceived bias in AI decisions.

These steps help keep AI fair, inclusive, and legally compliant.

3. Labor and Employment Laws

AI tools that monitor performance or manage employees must follow labor laws covering privacy, working hours, rest periods, and overtime. Excessive monitoring can also create a hostile work environment. Organizations should:

- Regularly review AI systems for compliance.

- Set clear policies for AI-driven performance monitoring.

- Ensure AI supports fair labor practices, rather than violating them.

4. Legal Liability and Compliance

Companies must take responsibility for the AI they use. It means:

- Conducting regular audits to check data handling, decision-making, and employee impact.

- Fixing any legal issues identified during audits.

- Establishing policies and guidelines for AI use, defining the roles of HR, AI developers, and legal teams.

- Keeping up-to-date with changing laws and regulations to stay compliant.

How to Mitigate Risk When Using AI in HR?

1. Understand Your Current Use of AI

Before anything else, companies need a clear picture of where and how AI is being used in HR. Many businesses already use AI tools for:

- recruitment

- onboarding

- performance tracking

- employee management

Often, these tools are adopted without anyone realizing the legal implications.

Action step: Create an inventory of all AI tools in use. Ask HR teams, hiring managers, IT, and security departments to fill out a short survey about:

- the tools they use

- what data is collected

- how it’s applied

It gives you a baseline to spot risks early.
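The survey answers can be captured in a simple structured inventory so that risky tools stand out. In this sketch the tool names, the sensitive-data categories, and the flagging rules are all hypothetical, chosen for illustration; they are a starting point for review, not a legal classification.

```python
from dataclasses import dataclass, field


@dataclass
class AIToolEntry:
    tool: str
    owner: str          # team that answered the survey
    hr_process: str     # where it is used, e.g. "recruitment"
    data_collected: list[str] = field(default_factory=list)
    shapes_decisions: bool = False  # does it rank, score, or filter people?


# Assumed categories for this sketch; tailor them to your jurisdictions.
SENSITIVE_DATA = {"video", "voice", "age", "health", "ethnicity", "biometrics"}
DECISION_PROCESSES = {"recruitment", "promotion", "performance tracking"}


def risk_flags(entry: AIToolEntry) -> list[str]:
    """Return follow-up actions suggested by one survey answer."""
    flags = []
    if entry.shapes_decisions and entry.hr_process in DECISION_PROCESSES:
        flags.append("influences employment decisions: check bias-audit and notice rules")
    sensitive = sorted(SENSITIVE_DATA.intersection(entry.data_collected))
    if sensitive:
        flags.append("collects sensitive data (" + ", ".join(sensitive) + "): run a DPIA")
    return flags


# Hypothetical tools, for illustration only.
inventory = [
    AIToolEntry("ScreenBot", "Talent Acquisition", "recruitment", ["cv", "video"], True),
    AIToolEntry("PayCalc", "Payroll", "payroll", ["salary", "hours"], False),
]
for entry in inventory:
    print(entry.tool, risk_flags(entry) or ["no flags"])
```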

2. Keep Up with New Rules and Regulations

AI laws are changing fast worldwide:

In Europe:

- The EU AI Act entered into force in August 2024, and most of its obligations, including those for high-risk systems, apply from 2026. It is the first major law dedicated to AI.

- It ranks AI systems by risk. Some, like emotion-recognition tools in the workplace, are banned outright (with narrow exceptions for medical or safety reasons).

- Recruitment and some other HR tools are classified as “high risk.” Employers must document how they use them, run data protection assessments, and ensure a human has the final say.

- GDPR rules (data accuracy, transparency, purpose limitation, etc.) still apply. In some countries, you may even need to consult worker representatives before rolling out a new AI system.

In the US:

There’s no single federal AI law yet, but existing laws still apply. Agencies like the FTC and Department of Labor are watching closely, and the Fair Credit Reporting Act (FCRA) can apply when third-party AI tools compile reports on candidates that are used in hiring decisions.

State laws are moving quickly:

- Colorado & Illinois: These states make it illegal to use HR AI tools that discriminate based on protected characteristics. Illinois’ new law takes effect in January 2026. Employers must also notify applicants and employees when AI is used in decisions.

- New York City: Employers using AI for hiring or promotions must publish a summary of an independent bias audit of the tool on their website.

3. Only Collect Data You Really Need

Before using any AI tool, employers should check what data it collects and why.

Make sure every piece of personal information has a clear purpose. Both GDPR and California’s CCPA emphasize data minimization: you should only process data that is necessary and proportionate for the task at hand.
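Data minimization can be enforced in code with a purpose register: each processing purpose lists the fields it needs and why, and anything else is dropped before data reaches the AI tool. The purpose name, fields, and justifications below are hypothetical.

```python
# Hypothetical purpose register: for each processing purpose, the fields it
# needs and the documented reason. Anything not listed is dropped.
PURPOSE_REGISTER = {
    "cv_screening": {
        "skills": "matched against the role's required skills",
        "years_experience": "the job posting sets a minimum",
        "certifications": "a licence is required for the role",
    },
}


def minimize(record: dict, purpose: str) -> tuple[dict, list[str]]:
    """Keep only fields justified for this purpose; report what was dropped."""
    if purpose not in PURPOSE_REGISTER:
        raise ValueError(f"no documented purpose called {purpose!r}")
    allowed = PURPOSE_REGISTER[purpose]
    kept = {k: v for k, v in record.items() if k in allowed}
    dropped = sorted(k for k in record if k not in allowed)
    return kept, dropped


applicant = {
    "skills": ["excel", "sql"],
    "years_experience": 5,
    "date_of_birth": "1980-02-14",
    "marital_status": "married",
    "photo_url": "https://example.com/photo.jpg",
}
kept, dropped = minimize(applicant, "cv_screening")
print(kept)
print(dropped)
```

Rejecting undocumented purposes outright forces teams to write down a justification before any new use of the data.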

4. Keep Humans in the Loop

AI can help with hiring and HR decisions. However, humans should always oversee the process. The U.S. Department of Labor recommends creating governance structures to monitor AI systems and making sure HR staff or managers can review decisions before they affect employees.

This principle aligns with rules in New York City, Colorado, and Illinois, and more regulations are expected. Training your team on AI use is essential to ensure fairness and compliance.
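Below is a minimal sketch of a human-in-the-loop gate. It assumes a policy (chosen for illustration, not a legal requirement stated here) that rejections and low-confidence recommendations never take effect until an HR reviewer decides. The class names, the 0.9 threshold, and the status strings are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    ADVANCE = "advance"
    REJECT = "reject"


@dataclass
class AIRecommendation:
    candidate_token: str
    outcome: Outcome
    confidence: float  # the tool's own confidence score, 0 to 1


def finalize(
    rec: AIRecommendation,
    reviewer_decision: Optional[Outcome] = None,
    min_confidence: float = 0.9,
) -> tuple[Optional[Outcome], str]:
    """Decide whether an AI recommendation may take effect.

    Adverse or low-confidence outcomes wait for a human reviewer; the
    reviewer's decision always replaces the tool's recommendation.
    """
    needs_human = rec.outcome is Outcome.REJECT or rec.confidence < min_confidence
    if not needs_human:
        return rec.outcome, "ai (logged for HR spot checks)"
    if reviewer_decision is None:
        return None, "pending human review"
    return reviewer_decision, "human reviewer"


reject = AIRecommendation("c-001", Outcome.REJECT, confidence=0.97)
print(finalize(reject))                   # waits for a reviewer
print(finalize(reject, Outcome.ADVANCE))  # reviewer overrides the tool
```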

5. Check and Document Risks

Organizations should review AI tools for potential legal, ethical, or reputational risks. Those include data privacy, cybersecurity, intellectual property, and employment law issues. Many current and upcoming laws will require formal risk assessments.

So, how do you stay on top of AI compliance?

- Know the law: Understand all regulations that apply to your workforce across different regions.

- Know what to do: Follow practical implementation steps and compliance checklists, including discussions with worker representatives when needed.

- Know how to document: Maintain clear records, policies, notices, consents, and training programs to show you are compliant and responsible.
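For the “know how to document” step, one lightweight option is an append-only decision log that records which tool and version produced a recommendation, what the final outcome was, who decided, and whether the candidate was notified. The sketch writes JSON Lines to any text stream; the field names, tool name, and reviewer address are hypothetical, and a production system would write to durable, tamper-evident storage rather than memory.

```python
import io
import json
from datetime import datetime, timezone


def log_decision(stream, *, tool: str, tool_version: str, candidate_token: str,
                 ai_outcome: str, final_outcome: str, decided_by: str,
                 notice_sent: bool) -> dict:
    """Append one AI-assisted decision to a JSON Lines log and return the entry."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "tool_version": tool_version,        # needed to reproduce or audit the result
        "candidate_token": candidate_token,  # pseudonymous ID, not a name
        "ai_outcome": ai_outcome,            # what the tool recommended
        "final_outcome": final_outcome,      # what actually happened
        "decided_by": decided_by,            # human reviewer or "ai"
        "notice_sent": notice_sent,          # candidate was told AI was used
    }
    stream.write(json.dumps(entry) + "\n")
    return entry


# Demo with an in-memory buffer.
buffer = io.StringIO()
log_decision(buffer, tool="ScreenBot", tool_version="2.3.1",
             candidate_token="c-0001", ai_outcome="reject",
             final_outcome="advance", decided_by="hr.reviewer@example.com",
             notice_sent=True)
print(buffer.getvalue())
```

Recording both the AI outcome and the final outcome makes human overrides visible, which is useful evidence that oversight actually happens.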

Case Studies

The following real-world examples of AI in HR illustrate the ethical and legal challenges companies face, along with best practices for using AI responsibly.

Success Stories

Unilever

Unilever uses AI in recruitment through video interviews and gamified assessments to evaluate candidates’ skills and personality traits.

AI analyzes facial expressions, tone, and word choice, while gamified tests assess cognitive abilities and personality traits.

The results:

- Time-to-hire dropped from 4 months to 4 weeks.

- Bias in hiring was reduced, promoting diversity.

- All candidates are assessed with the same objective, standardized tools.

Hilton Worldwide

Hilton uses AI chatbots to interact with candidates and guide them through applications, improving the candidate experience.

AI also analyzes employee feedback and engagement. It helps HR identify areas to improve workplace culture.

The results:

- HR teams save time for strategic work.

- Better employee engagement and talent retention.

Failures and Controversies

Uber

Uber’s AI system monitored driver performance but was seen as intrusive and unfair, penalizing drivers for things like traffic delays. This caused stress and dissatisfaction.

Lesson: AI surveillance can violate employee rights if not implemented carefully. Uber revised its system to make it fairer and more transparent.

Key Ethical and Legal Lessons

Ethical Lessons

- Always use diverse and representative datasets.

- Conduct regular bias audits.

- Include diverse teams in AI development to reduce bias risks.

Legal Lessons

- Comply with data protection laws like GDPR.

- Have a lawful basis, such as explicit consent, before collecting employee data.

- Use encryption, anonymization, and secure storage.

- Perform regular compliance checks to avoid legal risks.

Finally…

AI in recruitment has the potential to transform the hiring process, making it much faster and more efficient.

For instance, Unilever’s use of AI in hiring has shown that the time it takes to fill positions can be reduced by as much as 75%, dramatically speeding up recruitment. At the same time, organizations can make AI fairer by training it on diverse datasets, which some studies suggest can lower bias in hiring decisions by about 40%.
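As a quick check on the 75% figure, compare the Unilever time-to-hire numbers from the case study (4 months down to 4 weeks), counting a month as four weeks:

```latex
\text{reduction} = 1 - \frac{\text{new time-to-hire}}{\text{old time-to-hire}}
= 1 - \frac{4\ \text{weeks}}{4\ \text{months} \times 4\ \text{weeks/month}}
= 1 - \frac{4}{16} = 0.75
```

Using an average month of about 4.35 weeks instead gives 1 - 4/17.4 ≈ 0.77, so “as much as 75%” is, if anything, slightly conservative.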

While following data protection rules like GDPR may increase operational costs by 10–20%, this investment helps organizations avoid legal penalties and ensures that AI is used responsibly and ethically.

Frequently Asked Questions

1. Why is AI compliance important in HR?

Because HR decisions directly impact people’s careers and privacy. Non-compliance can lead to biased hiring, legal penalties, and reputational damage.

2. What are the main laws regulating AI in HR?

Key examples include:

- GDPR (EU/UK)

- CCPA (California)

- Title VII of the Civil Rights Act (U.S.)

- NYC Local Law 144

- Illinois AI Video Interview Act

- EU AI Act

3. How can HR teams reduce bias in AI recruitment tools?

By:

- conducting regular audits

- training AI on diverse datasets

- keeping human oversight in decision-making processes

4. Does GDPR apply to AI-driven HR systems?

Yes. GDPR requires transparency, consent, data minimization, and the right to contest automated decisions.

5. What risks do companies face if they don’t comply with AI regulations in HR?

Risks include:

- legal fines

- lawsuits

- reputational damage

- employee distrust

- biased outcomes

These outcomes can also harm workplace culture.

6. How do upcoming AI regulations (like the EU AI Act) affect HR?

They classify HR-related AI tools as “high risk” and require companies to implement audits, explainability, documentation, and human oversight.